At this stage in the web's magnificent story, getting and staying informed on the web has splintered into many products and processes. Search engines match a user's intent with content. Social bookmarking tools allow users to tag and share their information consumption experience. Social networks have taken over the dissemination of interesting and relevant information. This has resulted in content consumption islands. Mapping user intent to socially situated, relevant, interesting and experiential content seems like Utopia. We'll get there when people, situated in social networks, actively map their search intent to their consumption experience, and expose it for others' benefit.

Ideas around this notion are surfacing under different names, with slight variations - some call it a database of intentions, some call it content curation. I believe that for the web to continue to be a valuable and smooth experience, we the people, embedded in our networks, have to actively begin mapping intent to content. We thank Google for an excellent search service, and Facebook for mapping our network. We should use Google to create an annotated and filtered web of our intent for information, mapped to good content, and embed it into our networks on Facebook or Twitter.

Let me introduce this using an example:

Let's consider an intent among us to learn about the foods that affect moods, and how they do it.

i) Matching intent to content: Using a search engine, we see:

ii) Documenting information consumption: However, several hundred people have looked around for this topic, and have already bookmarked useful links on Delicious.

iii) Dissemination through social networks: A casual reader who discovers an interesting article on this topic may share it with his social network:

Now compare the above to a carefully chosen list of nuggets and links that I found relevant on this topic:

From a set of pages, I chose some useful nuggets that introduced many facts on the topic, and collated them together to make a report. The nuggets make it easier for readers to judge the quality of the content in the links.

Further, I can share this filtered list of documents - a gist of my information consumption experience - with the public, or with my social network, as Foods affecting moods.

In this exercise, I mapped an intent, "foods affecting moods", to a list of documents that have been funneled through my experience. The mapping is then shared with my social network. This process differs from, and adds value to, each of the experiences we normally go through:

i) When we use a search engine, we rely on the search engine's ranking, and the snippets it provides.

ii) We bookmark only individual pages on Delicious, do not document our intent (tags are too fine-grained), and do not attach good snippets. [Some social bookmarking tools like Diigo do allow selection of snippets from the pages].

iii) We share individual links on Twitter and Facebook, not chosen lists of documents that are mapped to an intent.

Can we get to an intent-content web that looks like:

Each user (a node in this graph) publishes a set of chosen links and nuggets for his search intentions (shown as blips). We'll see such intent-content mappings proliferate through social networks when they become easy to create. The next time you want to know something, the best human-chosen content will be right in your neighborhood.
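To make the picture concrete, here is a minimal sketch of how such a graph could be represented. Everything in it is an assumption made for illustration: the names `Nugget`, `User`, `publish` and `find_in_neighborhood` are hypothetical, the example URL and snippet are placeholders, and intents are matched by exact phrase, which a real tool would need to relax.

```python
from dataclasses import dataclass, field


@dataclass
class Nugget:
    """A snippet chosen from a page, plus the link it came from (hypothetical type)."""
    text: str
    url: str


@dataclass
class User:
    """A node in the social graph who publishes intent-content mappings."""
    name: str
    friends: list["User"] = field(default_factory=list)
    # Maps an intent (a short phrase, lower-cased) to the nuggets chosen for it.
    reports: dict[str, list[Nugget]] = field(default_factory=dict)

    def publish(self, intent: str, nuggets: list[Nugget]) -> None:
        """Attach chosen nuggets to an intent so that friends can find them."""
        self.reports.setdefault(intent.lower(), []).extend(nuggets)


def find_in_neighborhood(user: User, intent: str) -> list[tuple[str, Nugget]]:
    """Return (friend name, nugget) pairs that direct friends published for an intent.

    Assumption: exact phrase match on the lower-cased intent.
    """
    key = intent.lower()
    return [(f.name, n) for f in user.friends for n in f.reports.get(key, [])]


# Usage with placeholder data: alice curates, bob looks in his neighborhood.
alice = User("alice")
bob = User("bob", friends=[alice])
alice.publish(
    "Foods affecting moods",
    [Nugget("placeholder snippet text", "https://example.com/foods-and-moods")],
)
results = find_in_neighborhood(bob, "foods affecting moods")
```

The mapping is keyed by intent rather than by page, which is the difference from bookmarking individual links: one lookup returns a whole chosen list.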

Intent, not content, drives the web these days

The web has a tendency to bring about massive changes in culture, in rather subtle ways. One thing leads to another, one system exploits another, and very soon we are going down a path not comprehended. Here are some of my thoughts on the cause-effect cycles in the content-web:

- Hyperlinking makes it easy for content to be discovered and browsed.

- Content authoring takes off. Web sites are created that have hyperlinked content.

- Directories come up and organize the web's content into categories - to make the content explosion tractable.

- Search engines exploit the link structure, and bring relevance to search.

- Directories are not relevant any more. People use search engines for everything.

- Blogging software makes content creation go through the roof.

- Spammers understand how search engines work, know most people only use search engines, and make use of blogging software to game them.

- Search engines perennially fight spam.

- Content advertising models provide payoffs for people with good content and traffic.

- Spammers and marketers discover that writing "content" is the best way to attract search traffic.

- New businesses come up that analyse what people are searching for (intent), and write content to suit that. These are called content farms. Demand Media is the biggest example. Here's a piece from Time on this phenomenon. I collated a reasonably comprehensive list of opinions on the topic of content farms.

We are living in a time when companies study our intent, write content tailor-made to that, and then make money through ads. While this may not be as bad as it sounds, they are working symbiotically with search engines, and may often compromise on the quality of content that matches user intent. They mostly do just enough to get to the top of the search engine listing, and get your click. This spiral will make search engines weaker, and affect our information consumption experience - unless we take it from here on our own!

The importance of us being good hubs

The amount of time and skill people have to write fantastic content probably follows a skewed normal distribution. This means that most of us are average writers. There are a few fantastic writers. There are plenty of bad writers. The fantastic writers command a huge readership. Page quality on the web will follow an even more skewed distribution - tending towards the power law. There are a few fantastically written pages. Many pages are average in their content. There are plenty of useless pages.

In stark contrast to our producing ability, most of us are excellent consumers, with a great eye for identifying quality. If you realize that you spend more time consuming than producing, then it follows that you are a better consumer than a producer. Unfortunately, this ability has not left its footprint on the web. As discerning consumers, with the ability to tell good from bad, we have to document our consumption experience - and map intent to good content.

Recent trends in Web 2.0 are clearly directed at gathering more data from consumption experience. This started out with rating systems, and now we even have products that document our geographic visits. Facebook's recent announcements aim to make the whole web "likeable". We need to extend this to the intent-content mapping.

A good hub is one that points to good content. Being good hubs is just a natural extension of us putting our best skills (of consuming) to use!
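The word "hub" echoes hubs-and-authorities link analysis (commonly known as HITS), in which a hub scores well when it points to good pages and a page scores well when good hubs point to it. Reading the paragraph above in that sense is an assumption on my part. Under that assumption, a minimal sketch of the iteration looks like this; the node names and the tiny link graph are made up for illustration.

```python
def hits(links: dict[str, list[str]], iterations: int = 20):
    """Compute hub and authority scores for a small directed link graph.

    `links` maps a source node to the nodes it points to.
    Returns two dicts: (hub scores, authority scores).
    """
    nodes = set(links) | {t for targets in links.values() for t in targets}
    hub = {n: 1.0 for n in nodes}
    auth = {n: 1.0 for n in nodes}

    for _ in range(iterations):
        # Authority update: sum the hub scores of everyone linking to the node.
        auth = {n: 0.0 for n in nodes}
        for src, targets in links.items():
            for t in targets:
                auth[t] += hub[src]
        norm = sum(v * v for v in auth.values()) ** 0.5 or 1.0
        auth = {n: v / norm for n, v in auth.items()}

        # Hub update: sum the authority scores of everything the node links to.
        hub = {n: sum(auth[t] for t in links.get(n, [])) for n in nodes}
        norm = sum(v * v for v in hub.values()) ** 0.5 or 1.0
        hub = {n: v / norm for n, v in hub.items()}

    return hub, auth


# Made-up example: a curator shares two pages, a casual reader shares one of
# them, and a spammer points only to a page nobody else links to.
example_links = {
    "curator": ["page_a", "page_b"],
    "casual": ["page_b"],
    "spammer": ["page_c"],
}
hub_scores, auth_scores = hits(example_links)
```

In this toy graph the curator ends up with the highest hub score, because the pages the curator points to are the ones others also point to.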

Convinced? Want to get started with the intent-content web?

Mass adoption of an idea depends on extremely easy tools that facilitate its spread. We saw an explosion of content on the web when blogging tools took content authoring from webmasters to ordinary people. Good content started getting disseminated when social networks provided an organized mechanism for spreading it. Users started stating their preferences when web sites made it easy to gather public opinions. The intent-content web is also looking for that spark in a tool.

I'd like to talk about Diigo, and Nuggetize (something I've been working on myself). These tools follow different approaches, but can contribute to the intent-content web.

- Diigo: Diigo is probably the most fully featured bookmarking tool available on the web. After installing their toolbar, you can not only bookmark and tag web sites, but also select snippets inside them that you found relevant. Diigo organizes these quite well into a library. Further, you can create lists, and group related links into a list. If you map your intent to a list, then all the useful content can be populated into it. You can share these lists with your networks, and help others benefit from your consumption experience. Some examples of Diigo lists are:

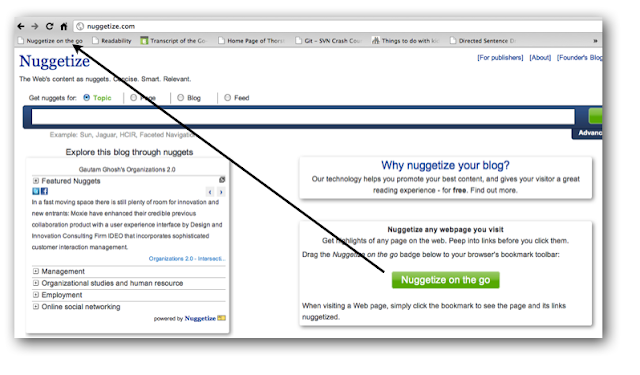

- Nuggetize: Nuggetize is designed to ease the process of choosing the right set of documents to read. Nuggetize mines interesting nuggets from web pages that match user intent, and organizes them into appropriate categories dynamically. It learns from user preferences and creates an intent-content report. A user can start with a query, and end with a list of chosen nuggets that lead to useful content on the topic. Nugget reports can easily be published into a social network, or incorporated into any blog. Some examples of Nugget reports are: